NAVIRE

Virtual Navigation in Image-Based Representations of Real World Environments


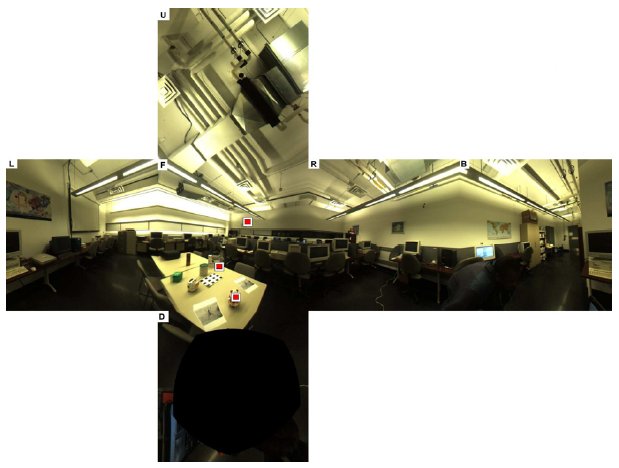

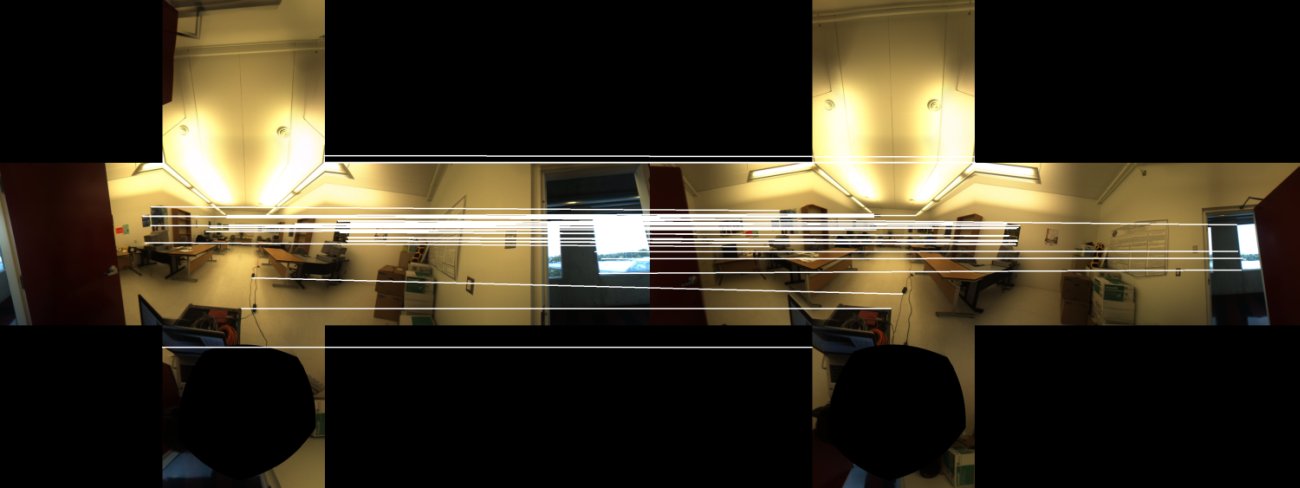

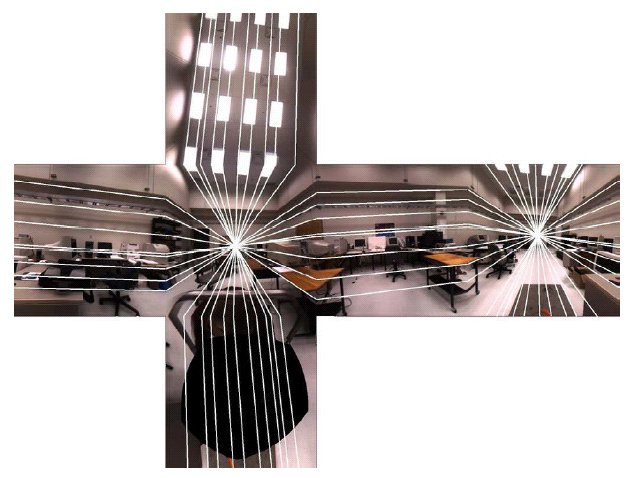

Images of the environment are acquired using the Point Grey Ladybug spherical digital video camera. This multi-sensor system has six cameras: one points up, and the other five point outward horizontally in a circular configuration. Each sensor is a single Bayer-mosaicked CCD with 1024 × 768 pixels. The sensors are configured so that the pixels of the CCDs map approximately onto a sphere, with roughly 80 pixels of overlap between adjacent sensors.
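Because each CCD is Bayer-mosaicked, every pixel records only one of R, G or B, and the two missing values must be interpolated. The sketch below shows plain bilinear demosaicking, the simple method named in the figure captions below. It assumes an RGGB layout. The Ladybug's actual Bayer phase is not stated here, and the function names are hypothetical. It is written in pure Python for readability rather than speed.

```python
# Minimal bilinear Bayer demosaicking sketch (pure Python, no dependencies).
# Assumption: RGGB layout, i.e. even rows are R G R G ..., odd rows are G B G B ...
# Assumes the image is at least 2 x 2 pixels.

def bayer_channel(y, x):
    """Return the colour channel (0=R, 1=G, 2=B) sampled at pixel (y, x) for RGGB."""
    if y % 2 == 0:
        return 0 if x % 2 == 0 else 1
    return 1 if x % 2 == 0 else 2


def mosaic_rggb(rgb):
    """Simulate a Bayer sensor: keep only the sampled channel of each RGB pixel."""
    return [[rgb[y][x][bayer_channel(y, x)] for x in range(len(rgb[0]))]
            for y in range(len(rgb))]


# 3x3 bilinear weights. Normalising by the weights of the samples actually present
# yields the usual 2- or 4-neighbour averages and also handles image borders.
KERNEL = ((1, 2, 1), (2, 4, 2), (1, 2, 1))


def demosaic_bilinear(mosaic):
    """Return an H x W x 3 nested list of floats interpolated from a Bayer mosaic."""
    h, w = len(mosaic), len(mosaic[0])
    out = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for c in range(3):
                if bayer_channel(y, x) == c:
                    out[y][x][c] = float(mosaic[y][x])  # measured value, kept as is
                    continue
                num = den = 0.0
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w and bayer_channel(yy, xx) == c:
                            weight = KERNEL[dy + 1][dx + 1]
                            num += weight * mosaic[yy][xx]
                            den += weight
                out[y][x][c] = num / den
    return out


if __name__ == "__main__":
    flat = [[(10, 20, 30)] * 4 for _ in range(4)]
    print(demosaic_bilinear(mosaic_rggb(flat))[1][2])  # [10.0, 20.0, 30.0]
```

Bilinear interpolation is exact on flat colour regions but blurs and produces colour fringes at edges. That is the weakness an adaptive method aims to reduce.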

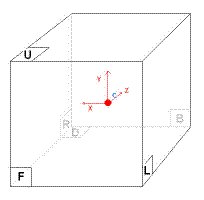

These images must then be combined to produce a panoramic image. A 360° panorama is formed by collecting all light incident on a point in space; in other words, a 2D plenoptic function has to be built from the intensity values extracted from the Ladybug sensors. The resulting plenoptic function can then be reprojected onto any type of surface. We use a cubic representation here. It is easy to manipulate and can be rendered very efficiently on standard graphics hardware. In addition, a cubic panorama is made of six identical planar faces, each acting as a standard perspective camera with a 90° field of view. This makes the representation very convenient to handle, since all the standard concepts of linear projective geometry still apply.
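Because each face is an ordinary 90° perspective camera, finding where a viewing direction lands on the cube reduces to two steps. First, pick the face of the dominant axis. Second, perform a perspective division. The sketch below assumes a hypothetical face-naming and axis convention. It is not the NAVIRE file layout, and real cube-map formats flip some axes per face.

```python
# Map a 3D viewing direction to a cube face and a pixel position on that face.
# Hypothetical convention: faces are named by dominant axis and sign ("+x", "-z", ...),
# and each face's in-plane axes are the two remaining coordinates in x, y, z order.

def direction_to_cube(direction, face_size):
    """Return (face, col, row) for a non-zero 3D direction vector."""
    if all(v == 0 for v in direction):
        raise ValueError("direction must be non-zero")
    axis = max(range(3), key=lambda i: abs(direction[i]))  # dominant axis picks the face
    major = direction[axis]
    face = ("+" if major > 0 else "-") + "xyz"[axis]
    # Perspective division onto the plane |major| = 1: both ratios lie in [-1, 1].
    a, b = (direction[i] / abs(major) for i in range(3) if i != axis)
    # A 90-degree field of view puts tan(45 deg) = 1 at the face edge, so the focal
    # length in pixels is face_size / 2 and the principal point is the face centre.
    f = face_size / 2
    return face, f + f * a, f + f * b


if __name__ == "__main__":
    print(direction_to_cube((1.0, 0.5, 0.0), 512))  # ('+x', 384.0, 256.0)
```

The same focal length (half the face width) is what makes the usual pinhole projection formulas, and hence linear projective geometry, directly applicable to each face.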


In order to produce high-quality panoramas, several problems need to be addressed:

Bilinear Bayer demosaicking

New adaptive demosaicking method

Original image

Retinex-enhanced image

Mark Fiala, "Immersive Panoramic Imagery," in Proc. Canadian Conference on Computer and Robot Vision, pp. 386-391, Halifax, Canada, May 2005.

Eric Dubois, "Frequency-domain methods for demosaicking of Bayer-sampled color images," in IEEE Signal Processing Letters, vol. 12, pp. 847-850, Dec. 2005.

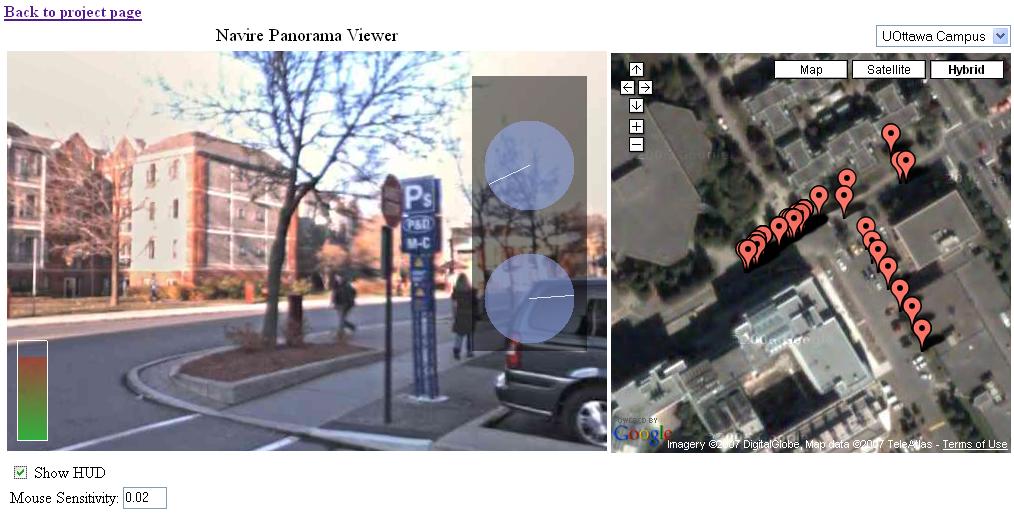

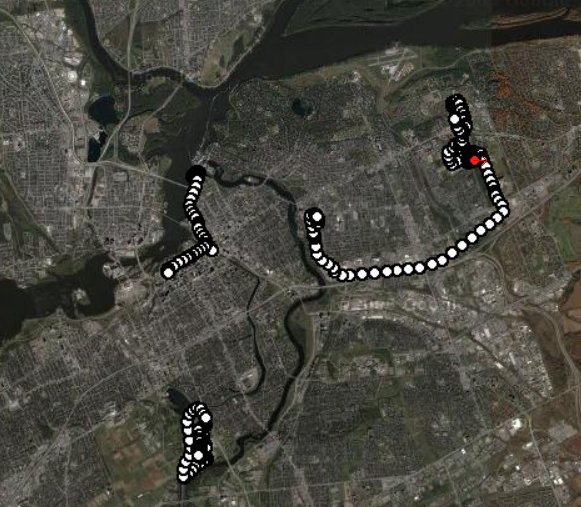

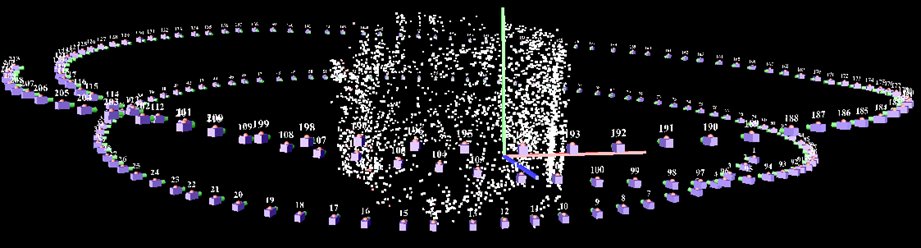

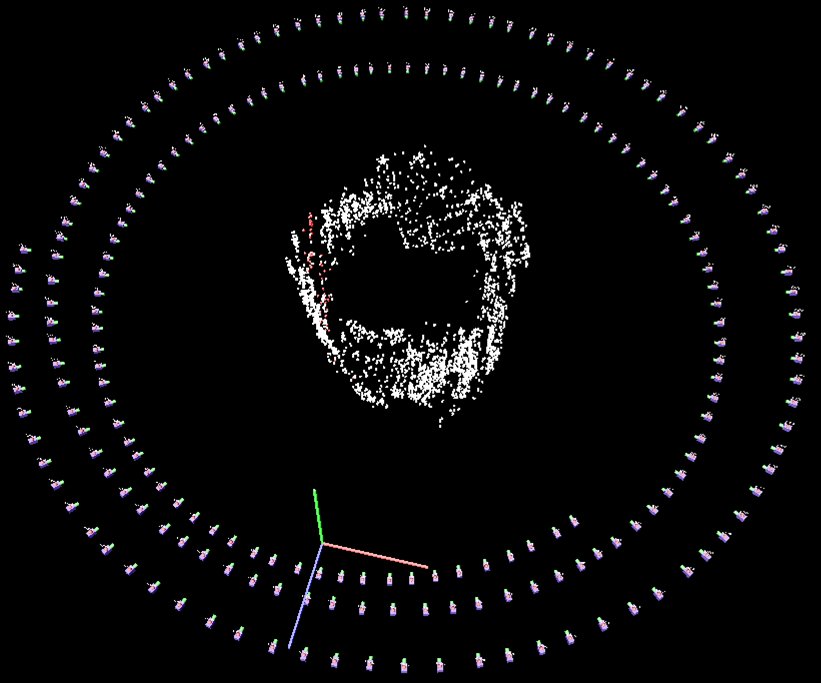

To build a complete image-based representation of a given environment, multiple panoramas must be captured. To navigate from one panorama to an adjacent one, the position of each panorama must be known.

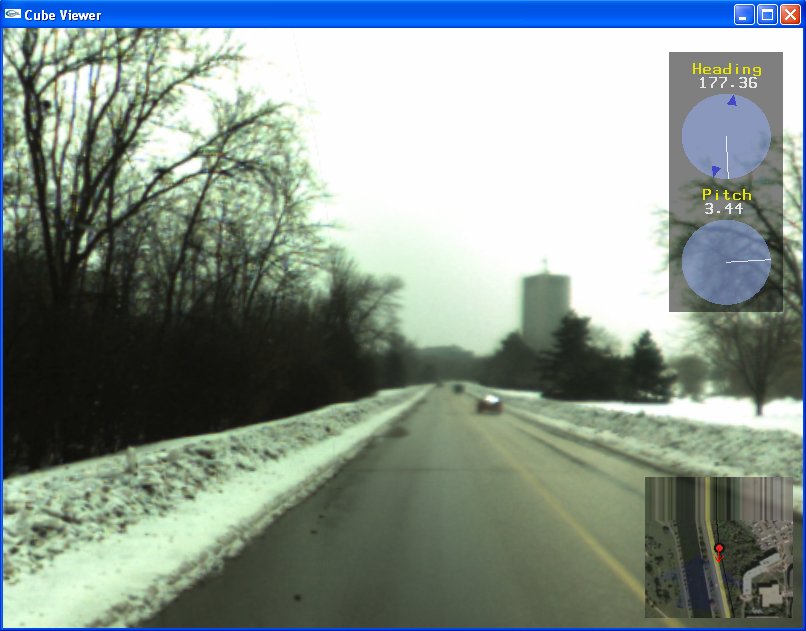

For outdoor environments, one simple solution is to use a GPS device during capture so that each image is associated with an absolute geo-position.

These panoramas then need to be connected to produce a navigation graph specifying which panoramas are reachable from a given viewpoint. However, the GPS solution is not always applicable: its accuracy can be insufficient, the satellite signal may be lost, and GPS usually does not work indoors.
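The page does not say how GPS-tagged panoramas are linked. One simple rule, assumed here purely for illustration, is to connect every pair whose GPS fixes lie within a distance threshold. Distances are computed with the haversine great-circle formula. The 15 m threshold and the panorama ids below are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(p, q):
    """Great-circle distance in metres between two (lat, lon) pairs given in degrees."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def build_navigation_graph(positions, max_link_m=15.0):
    """Connect every pair of panoramas closer than max_link_m (hypothetical rule).

    positions: dict mapping panorama id -> (lat, lon) GPS fix in degrees.
    Returns an adjacency dict: id -> sorted list of neighbouring panorama ids.
    """
    graph = {pid: [] for pid in positions}
    ids = list(positions)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if haversine_m(positions[a], positions[b]) <= max_link_m:
                graph[a].append(b)
                graph[b].append(a)
    return {pid: sorted(nbrs) for pid, nbrs in graph.items()}
```

For example, three panoramas spaced 0.0001° and 0.0002° apart in latitude (about 11 m and 22 m) give `{"p0": ["p1"], "p1": ["p0"], "p2": []}`. A pure distance rule ignores walls and other obstacles, so a real system would likely still need manual or vision-based pruning of links.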

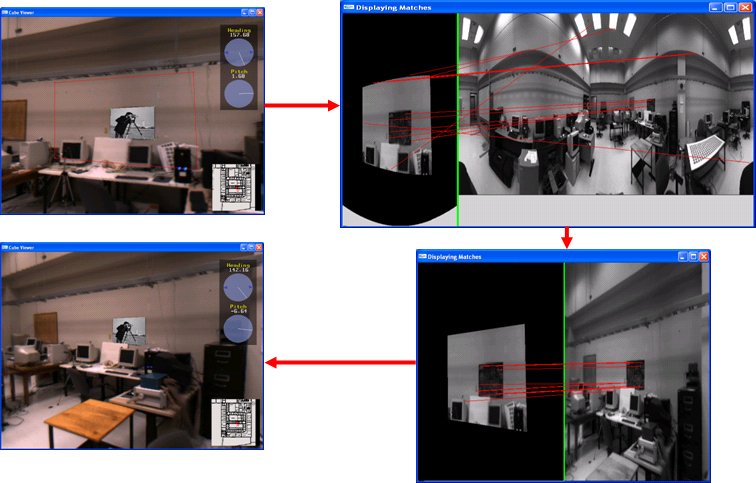

Image Matching

When no positioning devices are used, the camera positions can be estimated from the observations. To do so, correspondences between the views must be obtained. When a large number of images taken from various viewpoints need to be matched, scale-invariant feature matching is an excellent approach. The Hessian-Laplace operator combined with the SIFT descriptor has been selected here. In our comparative studies, this approach showed both good repeatability and reliable matching.
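The text names the detector and descriptor but not the rule used to accept a match. A common choice for SIFT descriptors, assumed here, is nearest-neighbour matching with Lowe's distance-ratio test. Under this test, a match is kept only if the closest descriptor is clearly closer than the second closest. The 0.8 ratio is Lowe's suggested value. The short vectors in the usage stand in for 128-D SIFT descriptors.

```python
import math


def match_ratio_test(desc_a, desc_b, ratio=0.8):
    """Match descriptors of image A to image B with a nearest-neighbour ratio test.

    desc_a, desc_b: lists of equal-length numeric vectors (e.g. 128-D SIFT).
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    if len(desc_b) < 2:
        return []  # the ratio test needs a second-nearest neighbour
    matches = []
    for i, da in enumerate(desc_a):
        # Euclidean distance from da to every descriptor of B, sorted ascending.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:  # nearest neighbour clearly better than the runner-up
            matches.append((i, j1))
    return matches
```

With `a = [(0, 0, 1), (1, 0, 0), (0.5, 0.5, 0)]` and `b = [(0, 0, 0.9), (1, 0.1, 0), (0.9, 0, 0.1)]`, the third descriptor of `a` is almost equidistant from two candidates and is rejected, giving `[(0, 0), (1, 1)]`. Brute-force search is quadratic in the number of features. Large image sets are usually matched with approximate nearest-neighbour structures such as k-d trees instead.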

In addition, the matches between the views can help determine the locations where the panoramic sequences cross each other, as well as the orientation of each panorama.

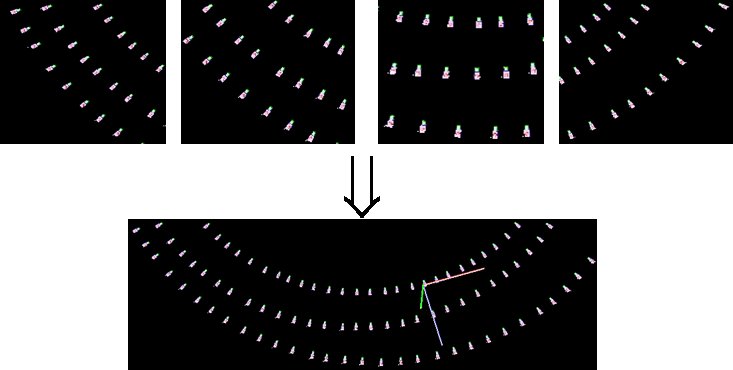

3D Reconstruction

Once an image set has been matched, bundle adjustment techniques can be used to compute the camera positions. To guarantee the convergence of the estimation process, an iterative procedure is proposed here that:

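Bundle adjustment refines camera poses and 3D points jointly by minimising the discrepancy between observed and predicted measurements. For panoramic cameras, one convenient choice, assumed here and not necessarily the one used in NAVIRE, measures that discrepancy as the angle between the observed viewing ray and the ray from the camera centre to the current 3D point estimate. The helpers below compute this residual and the summed squared cost. A real bundle adjuster would minimise that cost with a non-linear least-squares solver such as Levenberg-Marquardt.

```python
import math


def angular_residual(R, centre, point, observed_dir):
    """Angle (radians) between an observed ray and the ray predicted for a 3D point.

    Assumed pose convention: R is a 3x3 world-to-camera rotation (nested lists) and
    centre is the camera centre in world coordinates, so the predicted ray in camera
    coordinates is R @ (point - centre). observed_dir is any non-zero vector in
    camera coordinates, e.g. the back-projected pixel of a feature on a cube face.
    """
    v = [p - c for p, c in zip(point, centre)]
    pred = [sum(R[r][k] * v[k] for k in range(3)) for r in range(3)]
    dot = sum(a * b for a, b in zip(pred, observed_dir))
    norms = math.hypot(*pred) * math.hypot(*observed_dir)
    # Clamp guards against rounding pushing the cosine slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norms)))


def total_cost(observations):
    """Sum of squared angular residuals over (R, centre, point, observed_dir) tuples."""
    return sum(angular_residual(*obs) ** 2 for obs in observations)
```

An angular residual treats all viewing directions uniformly. This suits a 360° camera better than a per-face pixel error, which would be discontinuous across cube edges.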

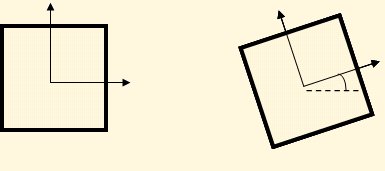

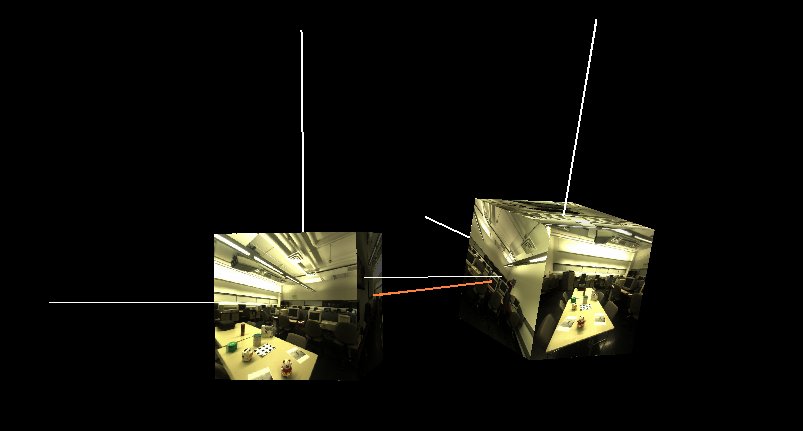

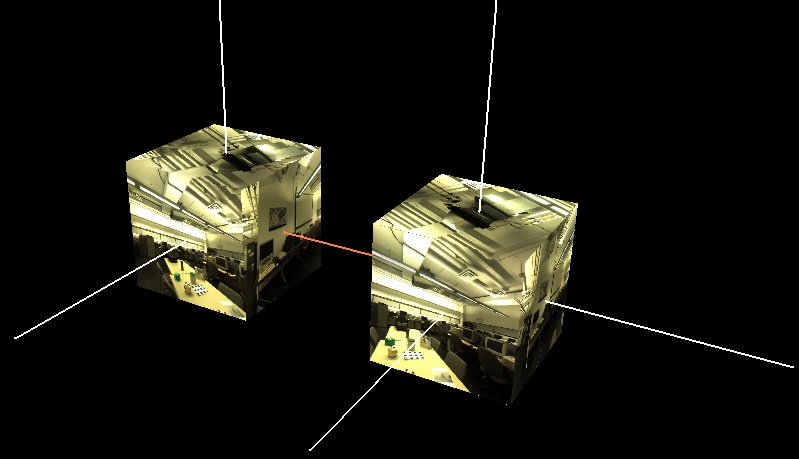

One difficulty that arises when navigating between adjacent panoramas is ensuring that they are consistently oriented. When a user looks in a particular direction and moves to the next panorama, the navigator must display the correct section of the new panorama. This is the section corresponding to what the user should see when looking in that same direction.

Panorama alignment therefore consists in determining the pan angle between two adjacent cubes. This can be done using feature point correspondences between the cubes, since each feature defines a direction in each cube's reference frame. When the translation between the two cubes can be neglected, the rotation angle can be found through a least-squares minimization that aligns the direction vectors given by a set of feature matches.
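When the cubes differ only by a pan, i.e. a rotation about the vertical axis, this least-squares problem has a closed-form solution. Take z as the vertical axis (an assumption) and write a_i, b_i for the directions of match i in the two cubes. Minimising Σ‖R_z(θ) a_i − b_i‖² is then equivalent to maximising Σ b_i · R_z(θ) a_i. The maximiser is θ = atan2(S, C), with C = Σ(a_x b_x + a_y b_y) and S = Σ(a_x b_y − a_y b_x). The function names are illustrative.

```python
import math


def estimate_pan(dirs_a, dirs_b):
    """Least-squares pan angle (radians) rotating cube A's directions onto cube B's.

    dirs_a[i] and dirs_b[i] are the (x, y, z) directions of the same matched feature
    in each cube's frame. Assumes z is the vertical (pan) axis and neglects the
    translation between the two capture points.
    """
    c = sum(a[0] * b[0] + a[1] * b[1] for a, b in zip(dirs_a, dirs_b))
    s = sum(a[0] * b[1] - a[1] * b[0] for a, b in zip(dirs_a, dirs_b))
    return math.atan2(s, c)


def rotate_z(v, theta):
    """Rotate vector v by theta radians about the z axis."""
    x, y, z = v
    return (x * math.cos(theta) - y * math.sin(theta),
            x * math.sin(theta) + y * math.cos(theta), z)
```

For noise-free data the estimate is exact: rotating a set of directions with `rotate_z(v, 0.5)` and passing both sets to `estimate_pan` returns 0.5 up to rounding. With real matches, outliers should be removed first (e.g. with RANSAC), because a single bad match biases the least-squares sums.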

In a more general setup, the essential matrix between a pair of cubes can be used to align them. Once this essential matrix has been estimated from the set of matches, its rotational and translational components can be obtained through decomposition. This information can also be used to rectify the cubes. For cubic panoramas, rectification means regenerating the cubic representation by applying rotations that make all corresponding faces of the rectified cubes parallel.
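Because every cube face is a calibrated perspective camera, the epipolar constraint can be written directly on direction vectors instead of pixel coordinates. Assume the convention x_B = R x_A + t for a scene point expressed in the two cube frames. Then E = [t]× R, and every correct match (a, b) satisfies bᵀ E a = 0. The sketch below builds E from a known (R, t) and evaluates that constraint. Estimating E from noisy matches (e.g. with the eight-point algorithm inside RANSAC) and decomposing it by SVD are beyond this sketch. The helper names are hypothetical.

```python
def skew(t):
    """Cross-product matrix [t]x, so that [t]x v equals the cross product t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]


def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(A, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def essential_matrix(R, t):
    """E = [t]x R under the assumed convention x_B = R x_A + t."""
    return matmul(skew(t), R)


def epipolar_residual(E, a, b):
    """b^T E a: zero (up to noise) when direction a in cube A matches b in cube B."""
    return sum(bi * ei for bi, ei in zip(b, matvec(E, a)))
```

The constraint is homogeneous, so it holds for directions of any length. Unit vectors from the cube faces can therefore be used directly without converting to pixel coordinates.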

Original cube images

Rectified cube images

Mark Fiala, "Automatic Alignment and Graph Map Building of Panoramas," in IEEE International Workshop on Haptic Audio Visual Environments and their Applications, pp. 103-108, Oct. 2005.

Florian Kangni and Robert Laganière, "Epipolar Geometry for the Rectification of Cubic Panoramas," in Canadian Conference on Robot Vision, June 2006.


L. Zhang, D. Wang, and A. Vincent, "Adaptive Reconstruction of Intermediate Views from Stereoscopic Images," in IEEE Trans. on Circuits and Systems for Video Technology, 2005.

If the user of the navigation system is located remotely over a network, the video sequence corresponding to the virtual camera output must be efficiently compressed for transmission to the user. The compression can make use of international standards for video compression to allow standard software modules to do the decompression. However, the virtual video has many special features that can be exploited by the compression system.

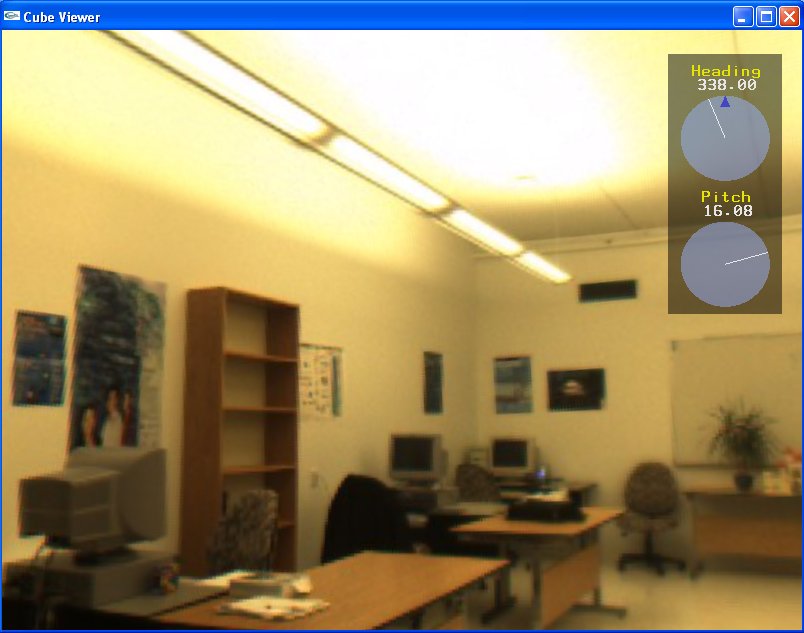

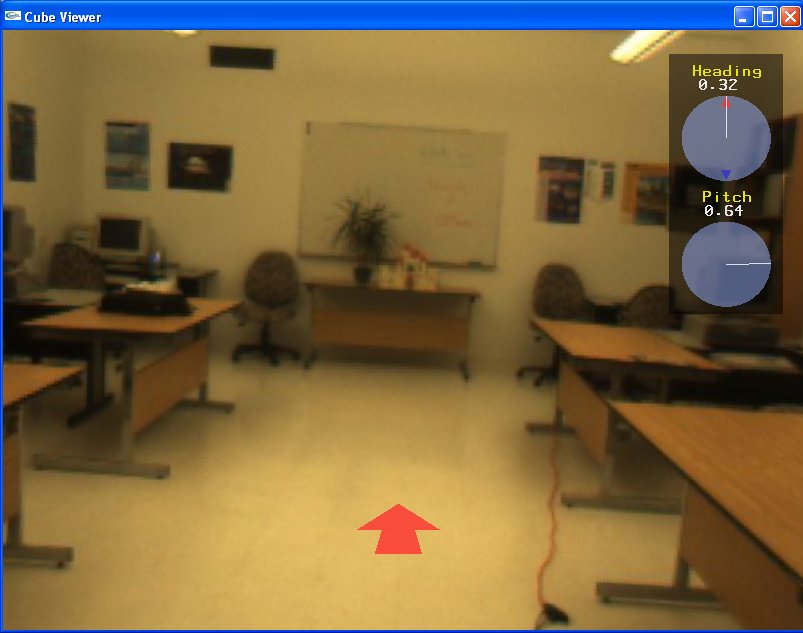

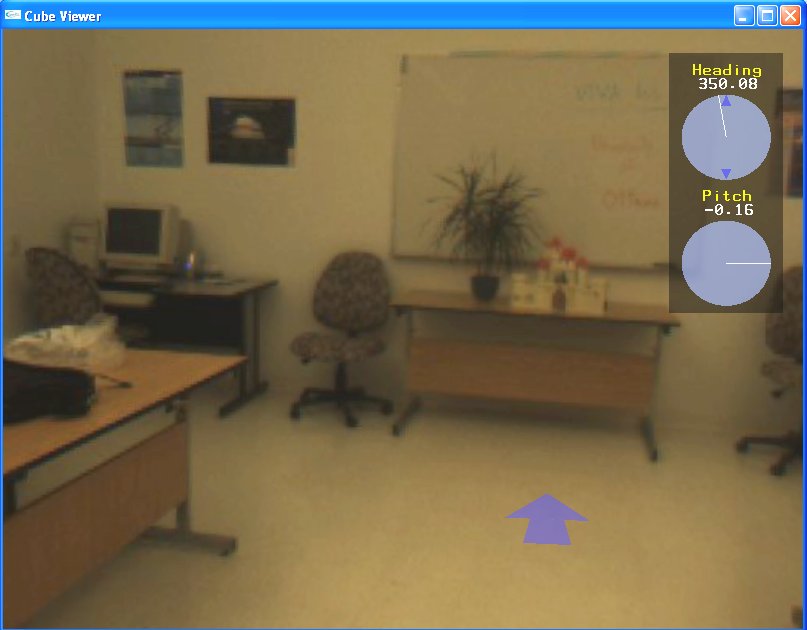

Cubic panoramas are displayed in a Cube Viewer, which uses accelerated graphics hardware to render the six aligned images of the cube sides. The 360° view orientation is controlled in real time using standard input devices (i.e., mouse and keyboard) or an Intersense inertial tracker mounted on a Sony i-glasses HMD. The user's current pitch and heading are displayed on the interface, along with a 2D map of the environment when one is available. The environment consists of a number of panorama locations connected in a graph topology.
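The page does not describe how the viewer decides which graph link to follow. One plausible rule, assumed here for illustration, stores for each link the bearing of the neighbouring panorama as seen from the current one. A move is then offered only when the user's current heading points within some tolerance of that bearing. The ids, bearings and 30° tolerance are hypothetical.

```python
def angular_gap_deg(a, b):
    """Smallest absolute difference between two headings in degrees (0 to 180)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def next_panorama(links, heading_deg, tolerance_deg=30.0):
    """Pick the linked panorama whose bearing best matches the user's heading.

    links: dict mapping neighbour id -> bearing in degrees from the current panorama.
    Returns the neighbour id, or None if no link lies within tolerance_deg.
    """
    best, best_gap = None, tolerance_deg
    for pid, bearing in links.items():
        gap = angular_gap_deg(heading_deg, bearing)
        if gap <= best_gap:
            best, best_gap = pid, gap
    return best
```

With `links = {"hall": 10.0, "lab": 185.0}`, a heading of 350° selects `"hall"` (20° away across the 0°/360° wrap-around), 200° selects `"lab"`, and 90° returns `None`. Wrapping the difference modulo 360° is what keeps headings just below north from being treated as far from bearings just above it.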

inside the remote environment.

can move in this direction.

one panorama to another.

The objective here is to add the capability of inserting virtual objects into the NAVIRE panoramic scenes.

Derek Bradley, Alan Brunton, Mark Fiala, and Gerhard Roth, "Image-based Navigation in Real Environments Using Panoramas," in IEEE International Workshop on Haptic Audio Visual Environments and their Applications, Oct. 2005.

Humans have more difficulty navigating and learning in virtual environments than in the real world. The rendering of virtual environments may be inadequate to represent the subtle visual cues required for efficient navigation and learning. In this study, we aim to empirically assess navigation and learning ability in two virtual environments that differ in the quality of their visual rendering.