
Registration of Range Measurements

Participants

- Phillip Curtis, M.A.Sc. student, 2003-2005
- Changzhong Chen, M.A.Sc. student, 2000-2002
- Dr. Pierre Payeur, SITE, University of Ottawa

Collaborators

- Natural Sciences and Engineering Research Council of Canada
- Canadian Foundation for Innovation
- Ontario Innovation Trust

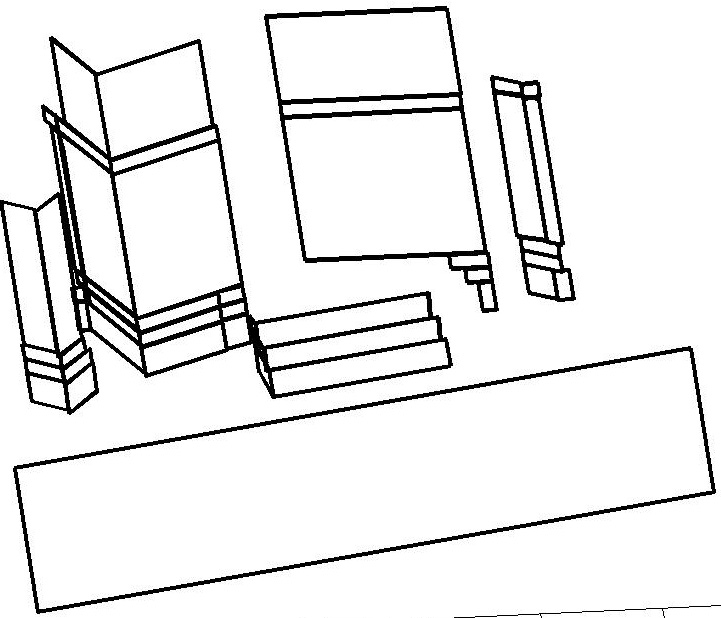

As physical sensors cover a limited field of view, a relatively large number of viewpoints must generally be visited

to obtain a complete estimate of the structure of a complex object. Merging the measurements collected from every viewpoint

implies that a system is able to keep the track of the sensor position and orientation. This is required to ensure that range

measurements are merged in a coherent model without introducing undesirable distortion. Unfortunately, this is hardly

achievable with high accuracy without the use of sophisticated and expensive pose estimation devices, such as coordinate

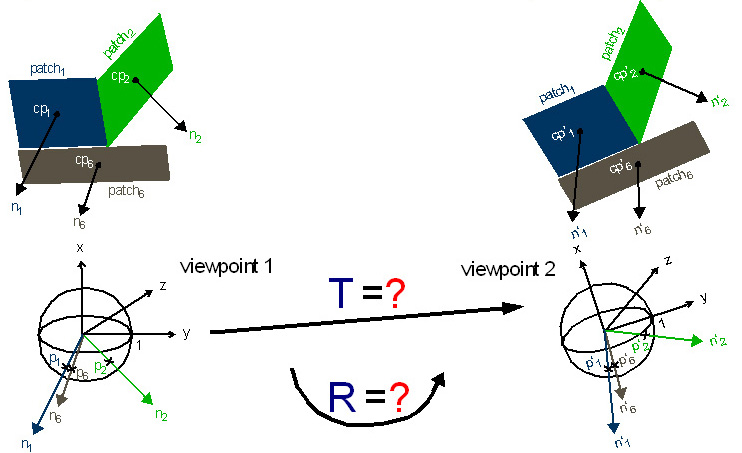

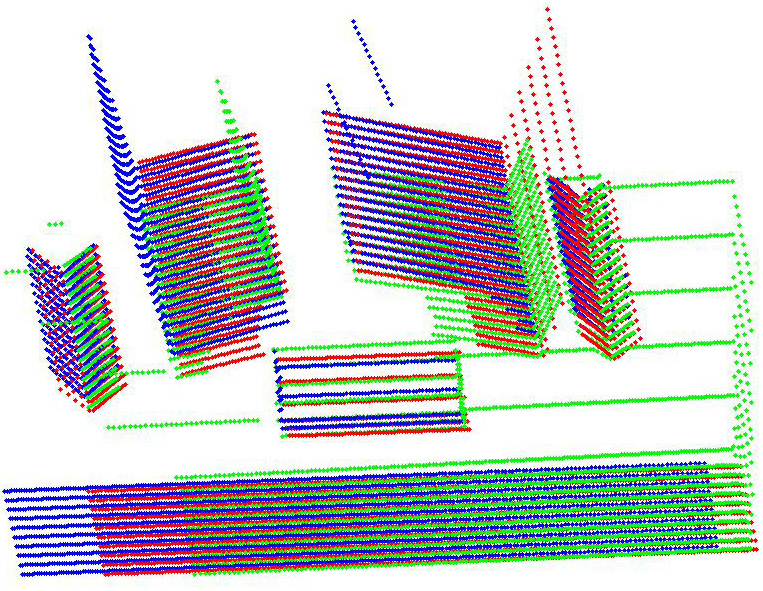

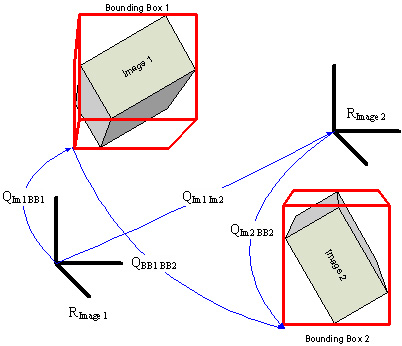

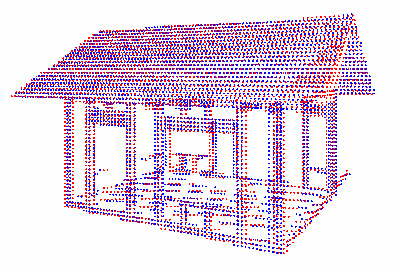

measuring machines (CMM) or visual trackers. Given these limitations, there are major advantages at developing new methods to estimate the relative position and orientation transformations between successive viewpoints that rely only on the raw range measurements that have been collected. While feature-based approaches have investigated for long, even for 3D datasets, there are still several issues to be addressed to reach reliable solutions. Those problems include the definition of features and their extraction from 3D point clouds, the matching problem, and more importantly, the requirement to provide an initial estimate of the correct translation and orientation parameters in order for the current methods to avoid convergence toward local minima. This research work aims at the development of more autonomous registration techniques that directly exploit range measurements obtained by commercially available sensors to generate accurate estimates of registration parameters, with minimal a priori knowledge about the actual registration parameters, and within limited computing time, as required by autonomous robotic systems. Two different strategies have been investigated until now. The first one consists of a technique based on an intermediate compact spatial representation of planar patches extracted from the range measurements. 3D points are initially clustered in a set of planar sections that best fit the objects found in the environment. The set of planar sections is compactly encoded as a gaussian spherical map to speed up computation. Assuming sufficient overlap between successive scans, a search for the best estimates of rotation and translation parameters is performed over the gaussian spherical maps in two phases, until the error between the sets of planar patches respectively extracted from the two point clouds to register is minimized. 
Experiments with both simulated and real range scans of everyday objects demonstrated the potential of the technique to provide very good estimates of the registration parameters without any initial knowledge.
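The two-phase search of the planar-patch strategy described above rests on a simple property of rigid motions: if a scan moves as y = R x + t, a plane n · x = d becomes (R n) · y = d + (R n) · t. Patch normals therefore depend on the rotation only, and once the rotation is known the translation follows linearly from the plane offsets. The following is a minimal sketch of that idea, not the authors' implementation, under several assumptions of our own: each patch is given as (unit normal, offset d, area); the Gaussian spherical map is kept unbinned, as area-weighted normals compared with a smooth exponential kernel instead of histogram bins; the rotation search is restricted to rotations about the z axis; and patches are matched by nearest normal. All function names are hypothetical.

```python
import math


def rot_z(theta, v):
    """Rotate vector v by angle theta (radians) about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1], v[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def gaussian_map_score(patches_a, patches_b, theta, kappa=50.0):
    """Correlate the area-weighted normals (Gaussian images) of A, rotated by theta,
    with those of B. Each patch is (unit_normal, offset, area)."""
    score = 0.0
    for na, _, wa in patches_a:
        ra = rot_z(theta, na)
        for nb, _, wb in patches_b:
            # Kernel equals 1 for identical normals and decays quickly otherwise.
            score += wa * wb * math.exp(kappa * (dot(ra, nb) - 1.0))
    return score


def estimate_rotation(patches_a, patches_b, step_deg=1):
    """Phase 1: exhaustive search over rotations about z (normals ignore translation)."""
    candidates = [math.radians(a) for a in range(0, 360, step_deg)]
    return max(candidates, key=lambda th: gaussian_map_score(patches_a, patches_b, th))


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def estimate_translation(patches_a, patches_b, theta):
    """Phase 2: least-squares t from d_b = d_a + n_b . t over matched plane pairs."""
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for na, da, _ in patches_a:
        ra = rot_z(theta, na)
        # Match the rotated A patch to the B patch with the closest normal.
        nb, db, _ = max(patches_b, key=lambda p: dot(ra, p[0]))
        for i in range(3):
            atb[i] += nb[i] * (db - da)
            for j in range(3):
                ata[i][j] += nb[i] * nb[j]
    # Solve the 3x3 normal equations (ata) t = atb with Cramer's rule.
    d = det3(ata)
    t = []
    for col in range(3):
        m = [row[:] for row in ata]
        for i in range(3):
            m[i][col] = atb[i]
        t.append(det3(m) / d)
    return tuple(t)


# Usage: simulate scan B from scan A with a known motion, then recover it.
s = 1 / math.sqrt(3)
scan_a = [((1.0, 0.0, 0.0), 2.0, 4.0), ((0.0, 1.0, 0.0), 1.0, 2.0),
          ((0.0, 0.0, 1.0), 0.5, 3.0), ((s, s, s), 1.5, 1.0)]
true_theta, true_t = math.radians(30), (0.5, -1.0, 2.0)
scan_b = [(rot_z(true_theta, n), d + dot(rot_z(true_theta, n), true_t), w)
          for n, d, w in scan_a]
theta = estimate_rotation(scan_a, scan_b)
print(math.degrees(theta), estimate_translation(scan_a, scan_b, theta))
```

The patch areas in the usage data are deliberately unequal, since a perfectly symmetric set of patches (for example, four identical walls) would leave the rotation ambiguous for any method based on normals alone. At least three non-parallel normals are needed for the translation system to be solvable.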

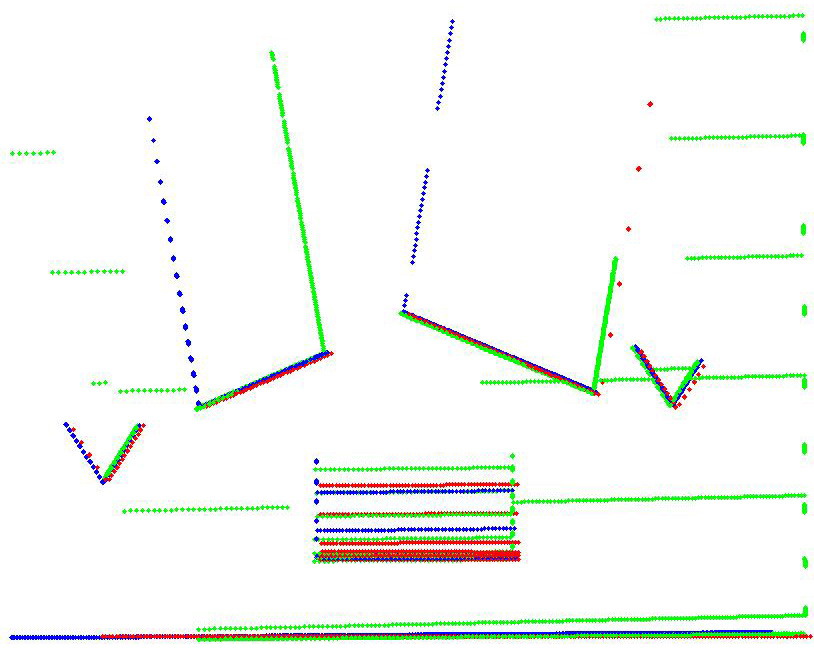

The second strategy builds upon a transformation of the range measurement distribution to the frequency domain. In doing so, the extraction of rotation parameters is decoupled from that of translation parameters, and parameter estimation is performed entirely in the frequency domain. First, the location of an axis of rotation in 3D space is extracted, along with the magnitude of the rotation between two separate but overlapping 3D point clouds. The translation between the viewpoints is then estimated in a second phase. Unlike similar frequency-domain approaches, the proposed solution minimizes the number of Fourier transforms required between 3D space and the frequency-domain representations. Experiments with simulated and real range scans collected with a laser scanner on complex objects showed that the technique achieves registration accuracy that compares favorably with classical iterative approaches, without the need for any initial estimate.
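The decoupling mentioned for the frequency-domain strategy above follows from the Fourier shift theorem: translating a grid by t multiplies each coefficient F(k) by the unit phase factor exp(-2πi k · t / n), so the magnitude spectrum |F| does not depend on the translation, while a rotation rotates it (approximately, on a discrete grid). Rotation can therefore be sought on magnitudes alone, and translation recovered afterwards from phases. The sketch below illustrates only that second phase, using standard phase correlation; it is not the authors' formulation. It assumes that the rotation has already been compensated, that point clouds are voxelized into a cubic occupancy grid with cyclic wrap-around, and that translations are whole cells. The names `voxelize`, `dft3` and `phase_correlate` are hypothetical, and a naive DFT is used only to stay self-contained; a practical version would use an FFT such as `numpy.fft.fftn`.

```python
import cmath
import itertools
import math


def voxelize(points, n, voxel_size):
    """Count points per cell of an n x n x n grid; indices wrap modulo n."""
    grid = {}
    for p in points:
        cell = tuple(math.floor(c / voxel_size) % n for c in p)
        grid[cell] = grid.get(cell, 0) + 1
    return grid


def dft3(grid, n, inverse=False):
    """Naive 3D discrete Fourier transform of a sparse {cell: value} grid."""
    sign = 1.0 if inverse else -1.0
    out = {}
    for k in itertools.product(range(n), repeat=3):
        acc = 0j
        for x, v in grid.items():
            if v == 0:
                continue
            phase = sign * 2.0 * math.pi * (k[0] * x[0] + k[1] * x[1] + k[2] * x[2]) / n
            acc += v * cmath.exp(1j * phase)
        out[k] = acc / n ** 3 if inverse else acc
    return out


def phase_correlate(grid_a, grid_b, n, eps=1e-9):
    """Estimate the cyclic cell shift t such that grid_b[x] = grid_a[x - t]."""
    fa, fb = dft3(grid_a, n), dft3(grid_b, n)
    cross = {}
    for k in fa:
        c = fb[k] * fa[k].conjugate()
        # A shift only multiplies each coefficient by a unit phase factor,
        # so keep the phase and drop (near-)zero-energy frequencies.
        cross[k] = c / abs(c) if abs(c) > eps else 0j
    corr = dft3(cross, n, inverse=True)
    # The inverse transform of the pure phase term peaks at the shift.
    return max(corr, key=lambda x: corr[x].real)


# Usage: translate a small cloud by (2, 1, 3) cells and recover the shift.
cloud = [(0.1, 0.2, 0.3), (1.2, 0.1, 0.4), (0.3, 2.5, 0.2), (0.2, 0.1, 1.7), (3.4, 1.1, 0.6)]
moved = [(x + 2.0, y + 1.0, z + 3.0) for x, y, z in cloud]
a, b = voxelize(cloud, 6, 1.0), voxelize(moved, 6, 1.0)
print(phase_correlate(a, b, 6))
fa, fb = dft3(a, 6), dft3(b, 6)
# Largest magnitude difference is close to zero: |F| ignores translation.
print(max(abs(abs(fa[k]) - abs(fb[k])) for k in fa))
```

This uses two forward transforms and one inverse transform for the translation phase; the original text reports that the proposed solution reduces the number of transforms compared with similar approaches, which this generic sketch does not attempt to reproduce.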


Related Publications


© SMART Research Group, 2009