3D Face Cloning from orthogonal photographs

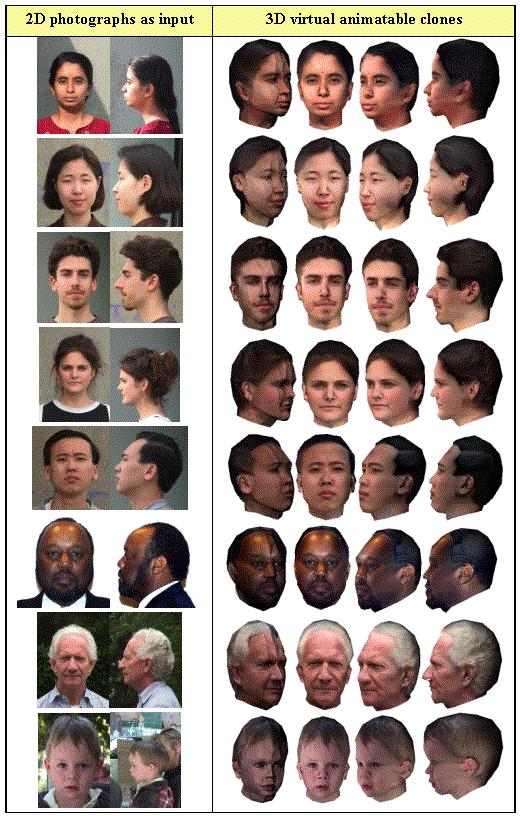

The approach modifies a generic model after feature points have been detected on the photographs, and generates the texture mapping, including fully automatic texture image generation. The cloning methods can produce a 3D animated face from orthogonal photographs in a few minutes (in practice, about 1 to 5 minutes).

2D photos offer clues to the 3D shape of an object. It is not feasible,

however, to consider 3D-points densely distributed on the head. In most

cases, we know the location of only a few visible features such as

eyes,

lips and silhouettes, the key characteristics for recognizing people,

which

are detected in a semiautomatic way using the freeform deformation and

then the structured snake method with some anchor functionality for a

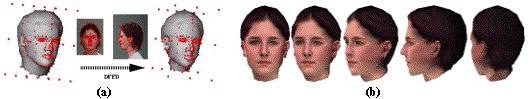

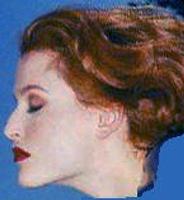

subset of feature points, say key features. Figure 1(a) depicts an

orthogonal pair of normalized images, showing the detected features.

The two 2D sets of position coordinates from the front and side views, i.e., the (x, y) and the (z, y) planes, are combined to give a single set of 3D points. The problem is how to deform a generic model, which has more than a thousand points, into an individualized smooth surface. One solution is to use the 3D feature points as a set of control points for a deformation.
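The combination of the two views into 3D feature points can be sketched as follows. This is a minimal illustration, not the author's code: the function name `combine_views`, the feature names, and the choice to average the two y values are all assumptions (the text only says the images are normalized, not how the shared axis is reconciled).

```python
def combine_views(front, side):
    """Merge front-view (x, y) and side-view (z, y) feature points into 3D points.

    Assumption: both dicts use the same feature names, and the images are
    normalized so that the two y values are comparable; y is averaged here.
    """
    points_3d = {}
    for name, (x, y_front) in front.items():
        z, y_side = side[name]
        y = (y_front + y_side) / 2.0  # assumed reconciliation of the shared axis
        points_3d[name] = (x, y, z)
    return points_3d


# Hypothetical feature points, in normalized image units.
front = {"nose_tip": (0.0, 10.0), "left_eye": (-3.0, 14.0)}
side = {"nose_tip": (9.0, 10.0), "left_eye": (5.0, 14.5)}
control_points = combine_views(front, side)
```

The resulting dictionary plays the role of the control point set that drives the deformation of the generic model.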

The deformation of a surface can then be seen as an interpolation of the displacements of the control points. In the Dirichlet-based FFD (DFFD) approach, any point of the surface is expressed relative to a subset of the control points through the Sibson coordinate system. DFFD is therefore used here to compute new geometrical coordinates for the generic head, on which the newly detected feature points are situated.
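The interpolation step can be illustrated with a short sketch. It assumes the Sibson coordinates of a surface point have already been computed (the computation itself, which relies on Voronoi cells, is not shown), and simply applies them as weights on the control point displacements. The function name `deform_point` and the sample values are hypothetical.

```python
def deform_point(point, weights, displacements):
    """Move a surface point by the weighted sum of control-point displacements.

    weights: {control_name: w} for the neighbouring control points, assumed
             to be precomputed Sibson coordinates (non-negative, summing to 1).
    displacements: {control_name: (dx, dy, dz)}.
    """
    total = sum(weights.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    moved = list(point)
    for name, w in weights.items():
        d = displacements[name]
        for axis in range(3):
            moved[axis] += w * d[axis]
    return tuple(moved)


# Hypothetical control-point displacements and weights for one surface point.
displacements = {"a": (1.0, 0.0, 0.0), "b": (0.0, 2.0, 0.0), "c": (0.0, 0.0, 4.0)}
weights = {"a": 0.5, "b": 0.25, "c": 0.25}
new_point = deform_point((1.0, 1.0, 1.0), weights, displacements)
```

Because the weights sum to 1, moving every control point by the same vector moves the surface point by that same vector, which is the behaviour one expects from an interpolation of displacements.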

The correct shapes of the eyes and teeth are assured through

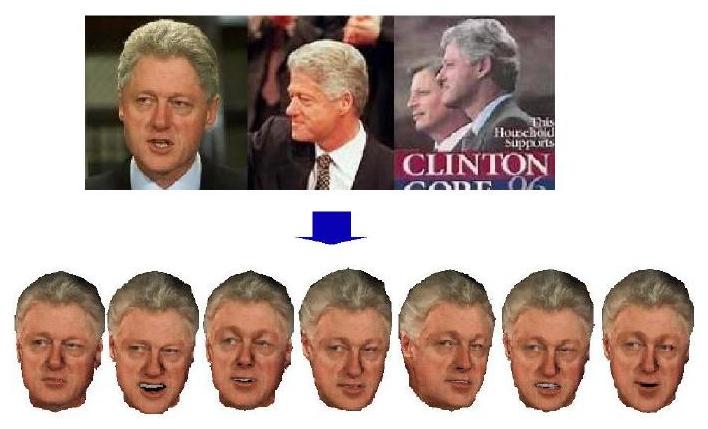

translation and scaling appropriate to the new head. As shown in Figure

1(b), the result is quite respectable when we consider the input data

(pictures from only two views). To improve the realism, we make use of

automatic texture mapping with texture generation together.

Figure 1: (a) Modification of a generic head according to feature points detected on pictures. Points on a 3D head are control points for DFFD. (b) Snapshots of a reconstructed head in several views.
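The translation and scaling applied to separate parts such as the eyes and teeth might look like the sketch below. The text does not say how the scale and offset are derived, so this example assumes a uniform scale taken from the distance between two anchor points (for instance, the corners of an eye) before and after the head is deformed, and a translation that maps the midpoint of the old anchors onto the midpoint of the new ones. The name `fit_part` and the sample coordinates are hypothetical.

```python
def fit_part(vertices, old_anchors, new_anchors):
    """Uniformly scale and translate a part so its two anchors follow the head.

    old_anchors / new_anchors: pairs of 3D points (assumed here to be the
    same two landmarks on the generic head and on the deformed head).
    """
    def dist(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5

    def midpoint(p, q):
        return tuple((a + b) / 2.0 for a, b in zip(p, q))

    scale = dist(*new_anchors) / dist(*old_anchors)
    old_c, new_c = midpoint(*old_anchors), midpoint(*new_anchors)
    # Scale about the old anchor midpoint, then move to the new midpoint.
    return [
        tuple(nc + scale * (v - oc) for v, oc, nc in zip(vertex, old_c, new_c))
        for vertex in vertices
    ]


eye = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.5, 0.0)]
old = ((-1.0, 0.0, 0.0), (1.0, 0.0, 0.0))
new = ((2.0, 1.0, 0.0), (6.0, 1.0, 0.0))
fitted_eye = fit_part(eye, old, new)
```

No rotation is applied in this sketch, in keeping with the text, which mentions only translation and scaling.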

Texture mapping serves not only to disguise the roughness of the shape matching, which is determined by only a few feature points, but also to imbue the face with a more realistic complexion and tint. If the texture mapping is not correct, an accurate shape is useless in practice. We therefore use information from the set of detected feature points to generate the texture fully automatically, based on the two views. The main criterion is to obtain the highest possible resolution for the most detailed portions. We first connect the two pictures along predefined feature lines using geometrical deformations and, to avoid visible boundary effects, a multiresolution technique. We then obtain appropriate texture coordinates for every point on the head using the same image transformation. Figure 1(b) shows several views of the reconstructed head.
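To give a feel for why a multiresolution technique hides the boundary between the two pictures, here is a simplified sketch on a single row of pixel intensities. It is a two-band stand-in, not the technique actually used in this work: each row is split into a coarse band (box blur) and a fine band (residual), the coarse bands are mixed over a wide ramp around the seam, and the fine bands are switched sharply at the seam. All function names and parameters are assumptions made for illustration.

```python
def box_blur(signal, radius):
    """Low-pass filter: mean over a window, truncated at the borders."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def ramp_mask(n, seam, half_width):
    """Weight of the left image: 1 left of the seam, 0 right, ramp between."""
    mask = []
    for i in range(n):
        if half_width == 0:
            mask.append(1.0 if i < seam else 0.0)
        else:
            t = (seam + half_width - i) / (2 * half_width)
            mask.append(min(1.0, max(0.0, t)))
    return mask


def two_band_blend(left, right, seam, radius=4):
    """Blend two equally long rows: wide ramp for coarse detail, hard cut for fine."""
    n = len(left)
    low_l, low_r = box_blur(left, radius), box_blur(right, radius)
    high_l = [a - b for a, b in zip(left, low_l)]
    high_r = [a - b for a, b in zip(right, low_r)]
    wide = ramp_mask(n, seam, radius)
    sharp = ramp_mask(n, seam, 0)
    return [
        w * ll + (1 - w) * lr + s * hl + (1 - s) * hr
        for w, s, ll, lr, hl, hr in zip(wide, sharp, low_l, low_r, high_l, high_r)
    ]


# Two rows with different overall brightness, joined at index 8.
left = [10.0] * 16
right = [20.0] * 16
blended = two_band_blend(left, right, seam=8, radius=2)
```

In this example the brightness difference between the two rows is spread over several pixels around the seam instead of appearing as a step, while any fine detail would be kept unblurred on each side. A full multiresolution scheme would use more than two bands.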

WonSook LEE, Ph.D.

School of Information Technology and Engineering (SITE)

University of Ottawa

800 King Edward Avenue

P.O. Box 450, Stn A

Ottawa, Ontario, Canada K1N 6N5

Office: SITE, Room 4065

Tel: (613) 562-5800 ext. 6227

Fax: (613) 562-5664

Email: wslee@site.uottawa.ca

Web: http://www.site.uottawa.ca/~wslee
