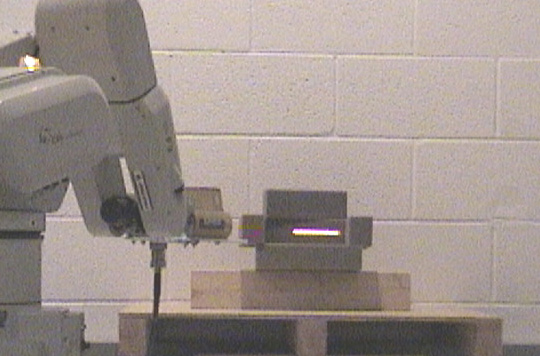

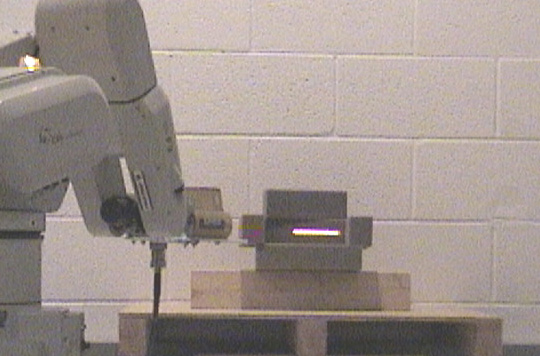

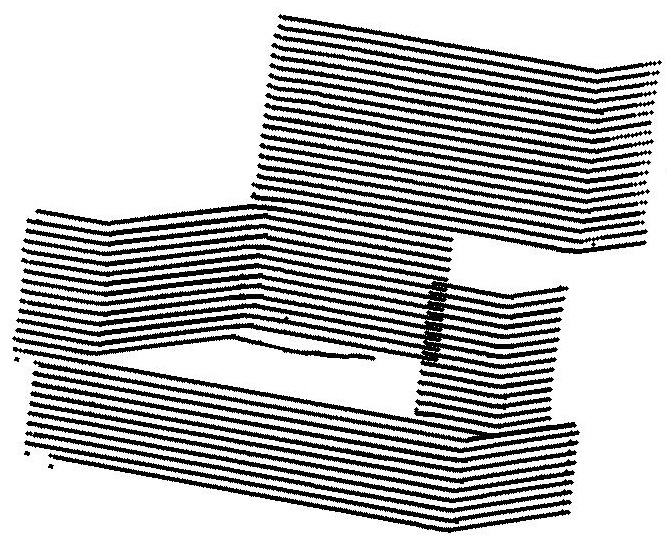

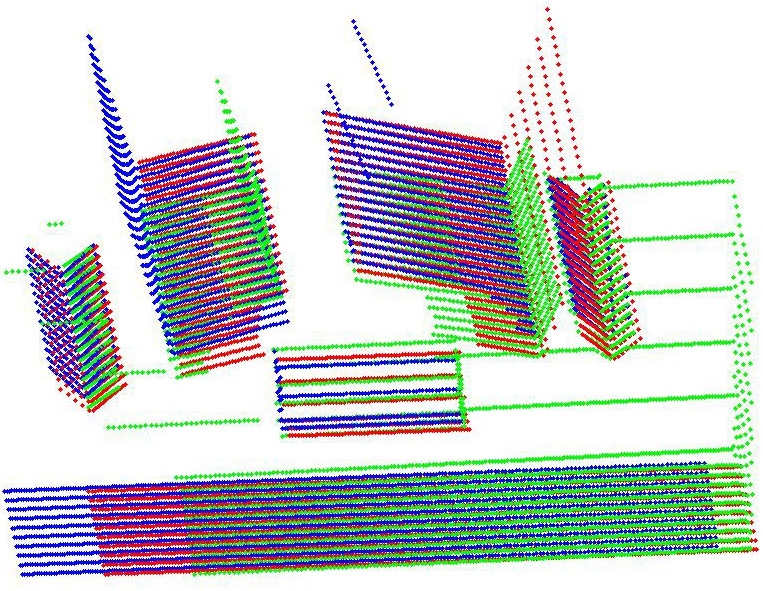

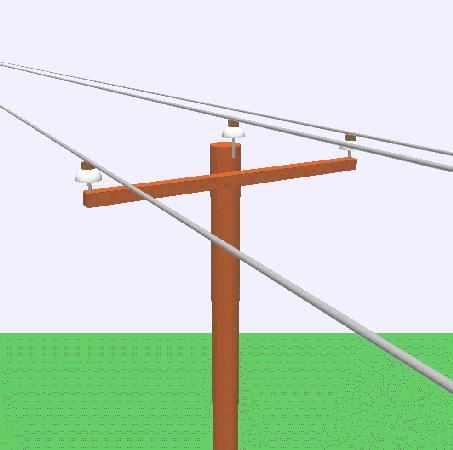

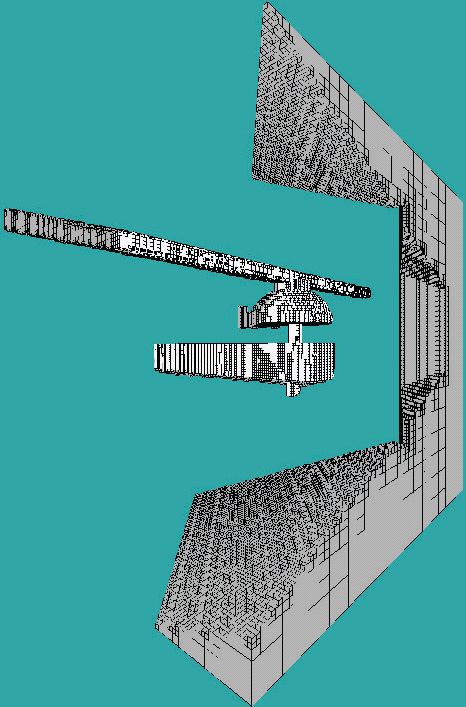

Scanning with a laser range finder

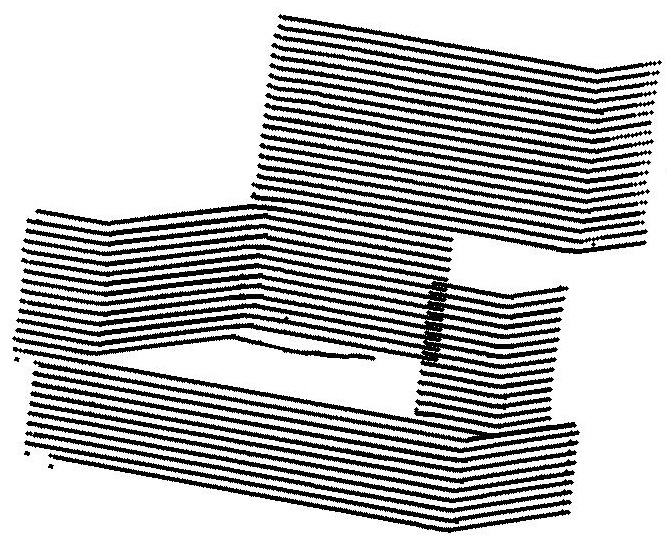

Raw range measurements

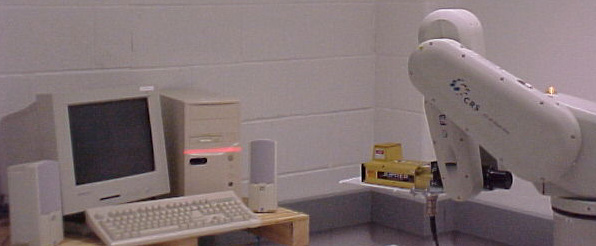

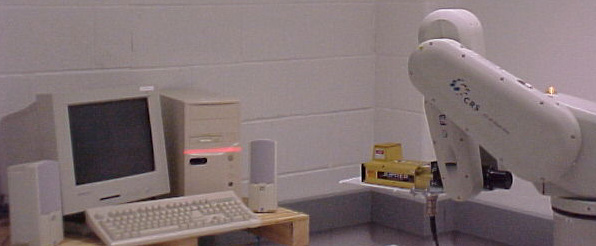

Collecting range measurements

Research Interests:


Range data acquisition consists of collecting information on three-dimensional scenes with active sensors and merging the data consistently so that the relevant characteristics of an environment can be extracted. The problems studied here concern the use, development and automation of range sensing strategies that provide complete coverage of large and complex objects, as required when computer vision is applied to the operation of robotic and autonomous systems. Current projects include the development of an integrated sensing setup that uses a serial arm manipulator to move a laser range finder around complex scenes for which occupancy models need to be computed. The main objectives are minimal intervention by human operators and shorter scanning times.

Principal investigator: Pierre Payeur

Participants: Phillip Curtis, Christopher Yang

Scanning with a laser range finder |

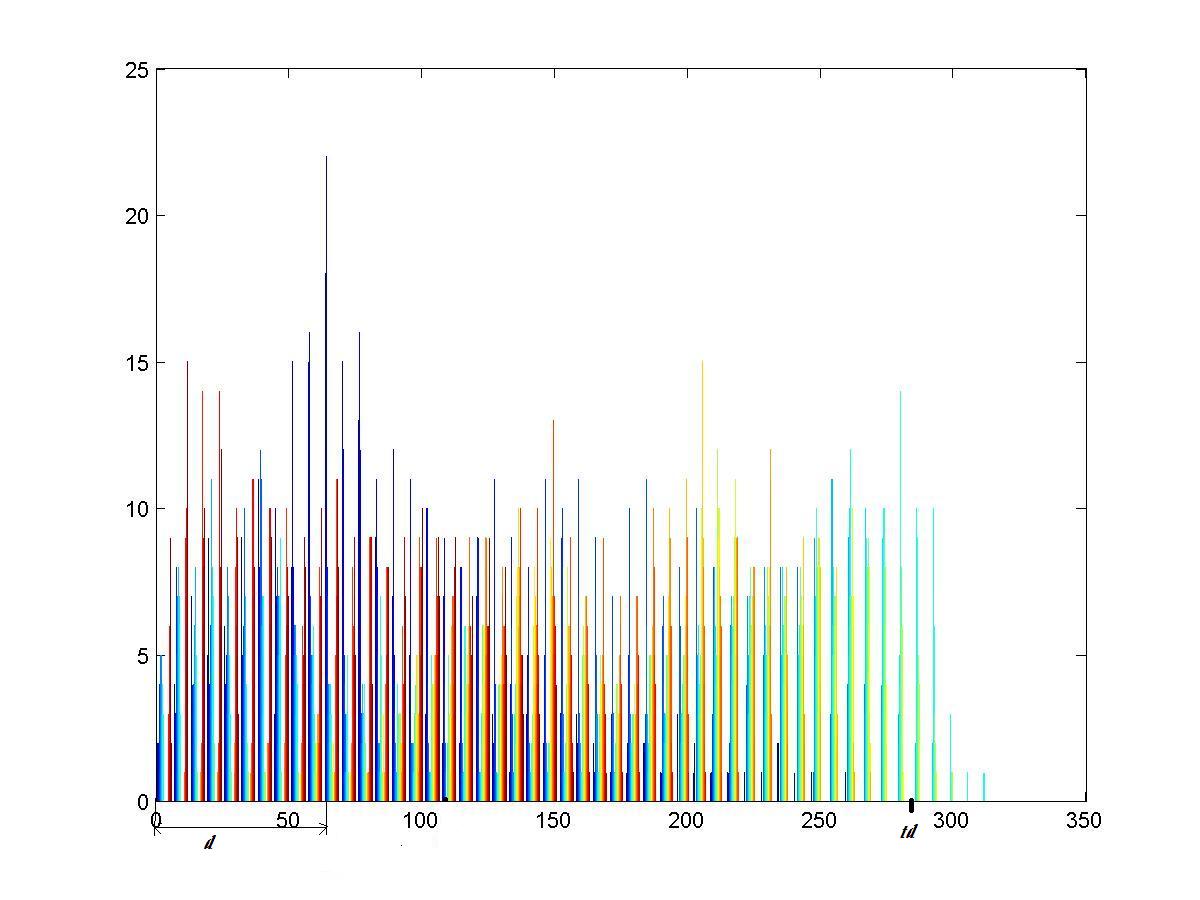

Raw range measurements |

Collecting range measurements |

|

The shapes of 3D objects pose significant challenges for data acquisition, since active range sensing technologies are highly sensitive to the orientation and shininess of surfaces. Stereoscopic vision, for its part, depends heavily on lighting conditions and surface textures. The problems examined here concern the combination of range (depth) and illuminance (light intensity) imaging technologies, drawing on their respective strengths to make 3D data acquisition as robust as possible, as required for robotic systems that operate in harsh environments. Current projects include the calibration of range finders with stereoscopic sensors and the study of how laser range sensing and stereoscopic vision complement each other to make data acquisition more robust in realistic industrial robotic environments.

Principal investigator: Pierre Payeur

Participants: Christopher Yang, Danick Desjardins

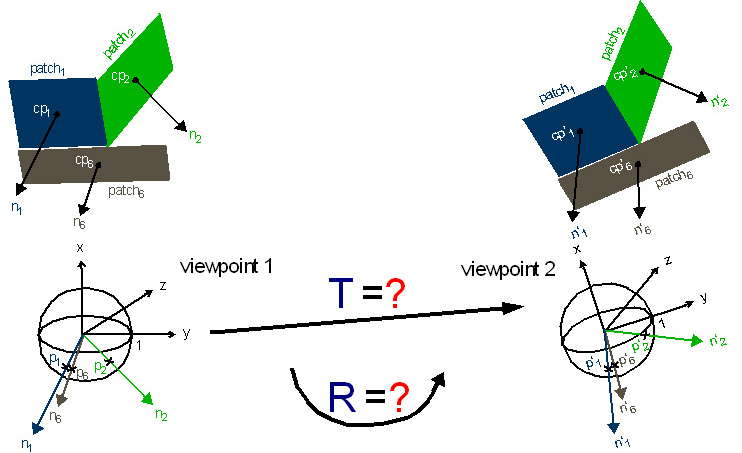

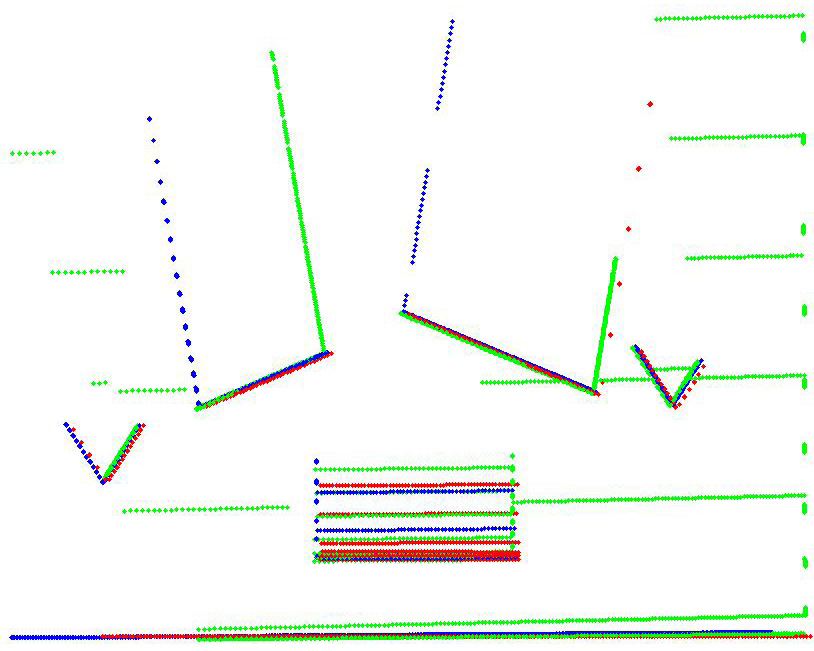

Because physical sensors have a limited field of view, a relatively large number of viewpoints must generally be visited to obtain a complete estimate of the structure of a complex scene. Merging the measurements collected from each viewpoint requires the system to keep track of the sensor's position and orientation, which is difficult to achieve in practical systems. It is therefore important to develop means of estimating the relative position and orientation transformations between successive viewpoints so that raw data can be merged constructively. Most existing approaches, however, rely on sophisticated intermediate representations of the measurements to estimate these transformations, which severely limits real-time robotic operations. Current projects on data registration aim to develop simpler and more efficient registration techniques that directly exploit the range measurements obtained by commercially available sensors and generate accurate estimates of the registration parameters within the very limited computing time that autonomous robotic systems require.
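To make the registration problem concrete, the sketch below estimates the rotation and translation between two viewpoints in the simplest possible setting. It is an illustration only, not the technique developed in these projects: it assumes 2D points and, more importantly, assumes that point correspondences between the two scans are already known (paired by index), which real range data does not provide. The function names `estimate_rigid_2d` and `apply_rigid_2d` are hypothetical.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rigid transform (theta, (tx, ty)) mapping src onto dst.

    Assumes src[i] and dst[i] are measurements of the same physical point.
    """
    n = len(src)
    cxs = sum(p[0] for p in src) / n
    cys = sum(p[1] for p in src) / n
    cxd = sum(p[0] for p in dst) / n
    cyd = sum(p[1] for p in dst) / n

    # Accumulate dot and cross products of the centered point pairs.
    dot = 0.0
    cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        xs, ys, xd, yd = xs - cxs, ys - cys, xd - cxd, yd - cyd
        dot += xs * xd + ys * yd
        cross += xs * yd - ys * xd

    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the target centroid.
    tx = cxd - (c * cxs - s * cys)
    ty = cyd - (s * cxs + c * cys)
    return theta, (tx, ty)


def apply_rigid_2d(points, theta, t):
    """Rotate points by theta, then translate them by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]
```

Once the parameters are estimated, `apply_rigid_2d` brings the second scan into the frame of the first so that the two sets of measurements can be merged. Without known correspondences, a closed-form step like this one is typically embedded in an iterative matching loop.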

Principal investigator: Pierre Payeur

Participants: Changzhong Chen, Phillip Curtis

Estimating transformation parameters

Segmented range measurements

Superposition of registered surfaces

Superposition of registered surfaces

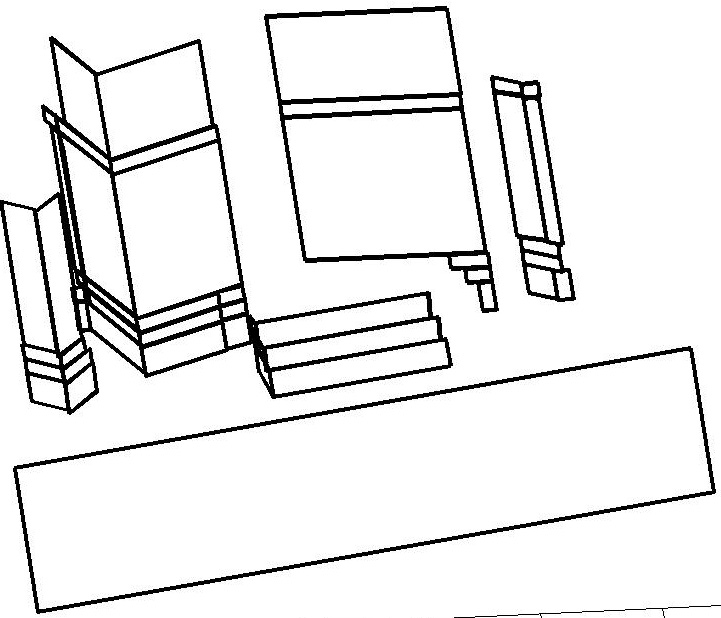

Determining the movement of a robot requires detailed knowledge of the objects located in its workspace. Workspace modeling is therefore a critical issue in any path planning and collision avoidance system. The problem of 3D space modeling from range or illuminance images spans many aspects: the registration of the raw data, the fusion of measurements originating from different sensors or different viewpoints, the choice of a representation suited to a specific task, the optimal rendering of the resulting model and, ultimately, the interpretation of the model that allows decision making. Current projects in modeling aim to define optimal types of environment representation and manipulation in the context of telerobotic operation in remote scenes. The question of data fusion is also examined extensively, along with the processing optimization required for real-time applications.
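As an illustration of how measurements from several viewpoints or sensors can be fused into a single workspace model, the sketch below uses the standard log-odds update for a probabilistic occupancy grid. This is a generic textbook formulation, assumed here for illustration; the source does not state which fusion rule the projects use. The class name `OccupancyGrid`, its methods and the example probabilities are hypothetical.

```python
import math


def logit(p):
    """Log-odds of a probability p, with 0 < p < 1."""
    return math.log(p / (1.0 - p))


class OccupancyGrid:
    """2D grid storing the log-odds that each cell is occupied."""

    def __init__(self, rows, cols, prior=0.5):
        self.prior = logit(prior)
        self.log_odds = [[self.prior] * cols for _ in range(rows)]

    def update(self, cell, p_measurement):
        """Fuse one measurement: the sensor's belief that `cell` is occupied."""
        r, c = cell
        self.log_odds[r][c] += logit(p_measurement) - self.prior

    def probability(self, cell):
        """Convert the stored log-odds back to an occupancy probability."""
        r, c = cell
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[r][c]))


grid = OccupancyGrid(4, 4)
grid.update((1, 2), 0.7)  # first viewpoint reports "probably occupied"
grid.update((1, 2), 0.7)  # second viewpoint agrees, so confidence increases
```

Working in log-odds turns the fusion of independent measurements into a simple addition per cell, which is one reason this representation suits processing under real-time constraints.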

Principal investigator: Pierre Payeur

Participants: Benoît Bolzon, Bassel Abou-Merhy

Typical experimental 3D scene

Occupancy grid model

Occupancy grid model

Complex 3D scene

Occupancy grid model

Occupancy grid model

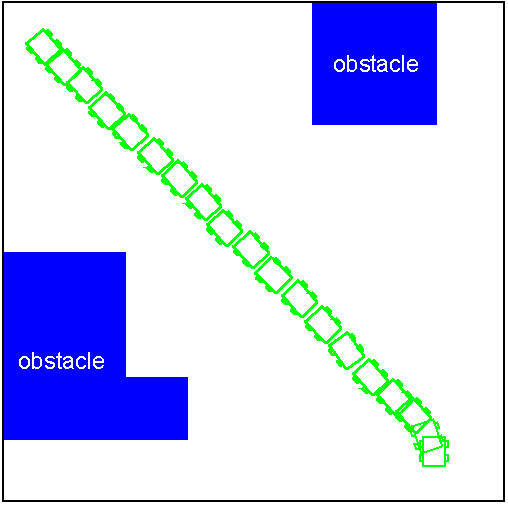

Path planning and collision avoidance in robotic applications is the process of determining a safe trajectory for a mobile device to move among obstacles. It also involves a level of optimization that minimizes travelling time while ensuring that the robot reaches its destination. In a general context, path planning for robots remains an unsolved problem that impedes the development of fully autonomous systems. Our research examines the potential of new approaches based on a probabilistic description of the clutter in the robot's workspace. The studies are conducted in both 2D and 3D spaces, with the goal of defining robust path planning strategies able to deal with various environments. Current projects examine how discrete potential fields can be computed directly from probabilistic occupancy grids representing complex environments. The management of fine interactions between a serial manipulator and selected objects in the scene is also investigated as part of the path planning operation.
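One way to picture a discrete potential field derived from a probabilistic occupancy grid is sketched below. It is not the formulation used in these projects, which the source does not specify. Instead of summing separate attractive and repulsive fields, it swaps in a Dijkstra-style navigation function: each cell's potential is the cheapest cost to reach the goal, where entering a cell costs more the more likely it is to be occupied. The names `navigation_potential` and `descend`, the penalty weight `k` and the `blocked` threshold are all assumptions made for the example.

```python
import heapq

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def navigation_potential(grid, goal, k=10.0, blocked=0.9):
    """Cost-to-goal potential over a grid of occupancy probabilities.

    Cells with probability >= blocked are treated as obstacles.
    """
    rows, cols = len(grid), len(grid[0])
    pot = [[float("inf")] * cols for _ in range(rows)]
    pot[goal[0]][goal[1]] = 0.0
    heap = [(0.0, goal)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if d > pot[r][c]:
            continue  # stale queue entry
        for dr, dc in STEPS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] < blocked:
                nd = d + 1.0 + k * grid[nr][nc]  # unit step plus occupancy penalty
                if nd < pot[nr][nc]:
                    pot[nr][nc] = nd
                    heapq.heappush(heap, (nd, (nr, nc)))
    return pot


def descend(pot, start):
    """Follow the steepest descent of the potential from start to the goal."""
    rows, cols = len(pot), len(pot[0])
    r, c = start
    if pot[r][c] == float("inf"):
        raise ValueError("start cannot reach the goal")
    path = [start]
    while pot[r][c] > 0.0:
        candidates = [(r + dr, c + dc) for dr, dc in STEPS
                      if 0 <= r + dr < rows and 0 <= c + dc < cols]
        r, c = min(candidates, key=lambda rc: pot[rc[0]][rc[1]])
        path.append((r, c))
    return path
```

Because every reachable cell has a neighbour with strictly lower potential, this field has no local minima, unlike a naive sum of attractive and repulsive terms. Raising `k` trades a longer route for more clearance from cells that are likely to be occupied.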

Principal investigator: Pierre Payeur

Participant: Martin Soucy

Collision-free trajectory

Repulsive potential field

Attractive potential field

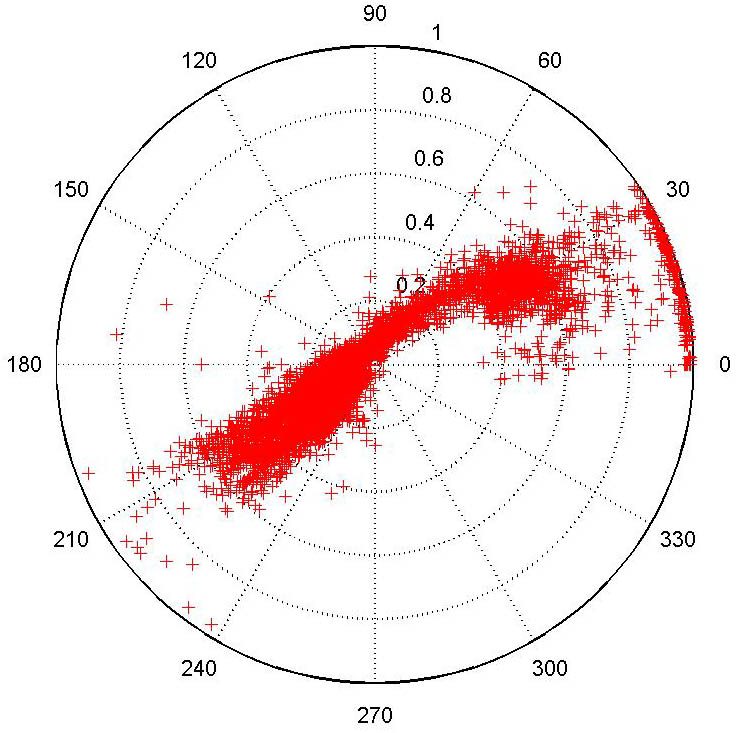

Computer vision techniques have a broad range of applications in the decision-making systems used to control mechanisms with various levels of autonomy. However, extracting relevant information from gray-level or color images remains an important challenge. The goal of our research projects on computer vision for autonomous systems is to develop image processing algorithms suited to different types of systems with real applications in everyday life. The main objective is to develop more robust systems that interact with human beings in a completely or partially automatic way. The number of possible applications is therefore extremely vast and diverse. Current projects include the development of image-based movement detection systems that are robust to outdoor conditions (lighting and weather variations). So far, development of these systems has focused mainly on traffic management and optimization.
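The sketch below gives a rough idea of why a color space such as HSV can help with changes in lighting. It is a hypothetical illustration, not the detection algorithm developed in these projects. It compares each pixel of a frame with a background image using only hue and saturation, ignoring the value (brightness) channel, so that a uniform darkening of the scene is not mistaken for movement. The function names, the weighting and the threshold are assumptions.

```python
import colorsys


def chroma_change(rgb_a, rgb_b):
    """Difference between two RGB pixels (floats in 0..1), ignoring brightness."""
    h1, s1, _ = colorsys.rgb_to_hsv(*rgb_a)
    h2, s2, _ = colorsys.rgb_to_hsv(*rgb_b)
    dh = abs(h1 - h2)
    dh = min(dh, 1.0 - dh)  # hue is circular on [0, 1)
    # Hue is unreliable for nearly gray pixels, so weight it by saturation.
    return 2.0 * dh * min(s1, s2) + abs(s1 - s2)


def motion_mask(background, frame, threshold=0.15):
    """Boolean mask of pixels whose chroma differs from the background."""
    return [
        [chroma_change(b, f) > threshold for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


background = [[(0.8, 0.4, 0.2), (0.5, 0.5, 0.5)]]
frame = [[(0.4, 0.2, 0.1), (0.9, 0.1, 0.1)]]  # darker pixel, then a new red object
mask = motion_mask(background, frame)
```

In this example the first pixel only becomes darker and is not flagged, while the second changes from gray to red and is. A detector built this way would miss gray objects on a gray road, which is one reason practical systems combine several cues.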

Principal investigator: Pierre Payeur

Participant: Yi Liu

Histogram of a traffic scene

HSV map on a rainy day

Traffic detection on a sunny day

Traffic detection on a snowy night