GestureVue: A human-computer interaction project

Posted by Pavel Popov

Introduction

Gesture recognition has many applications. Interaction with machines can be simplified and streamlined using intuitive gestures that mimic motions people already perform away from the computer. For example, scrolling through a website can be done with a gesture similar to the motion required to drag a piece of paper across a desk. The problem then becomes: how do we recognize hands? Using a variety of methods, this project aims to improve on the current state of the art in hand recognition and to use those improvements to produce stable methods for interacting with computers through gestures.

Goals of this project

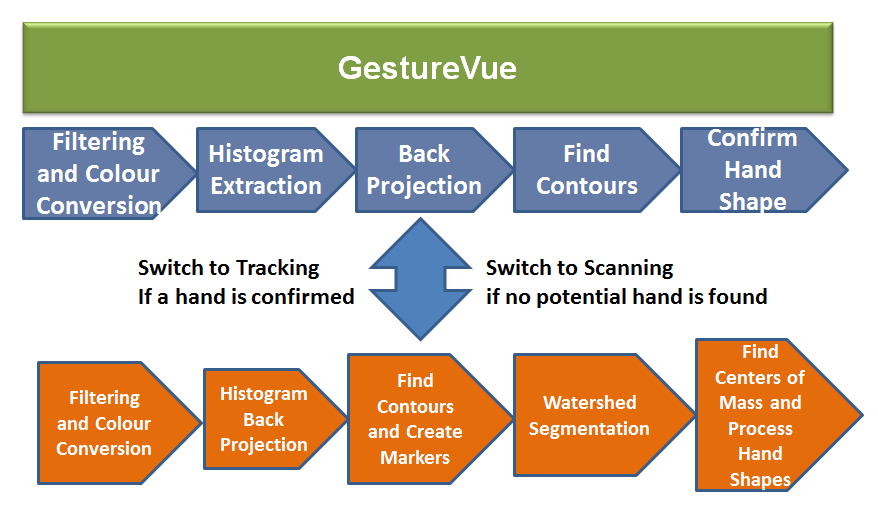

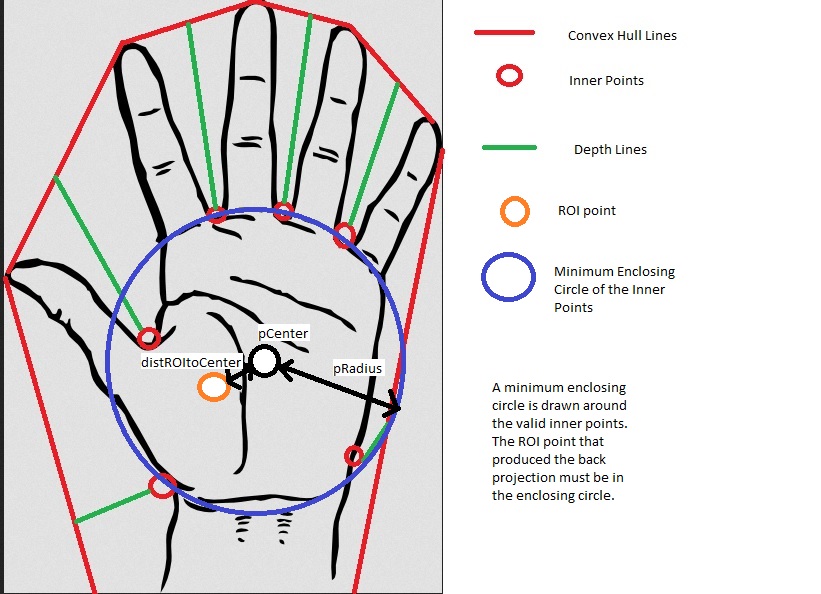

- Scanning for Hands: The first goal of this project is to scan for hands accurately, using back projection and shape analysis. The algorithm should be robust enough to extract hand shapes even against noisy and varying backgrounds. Many competing hand-scanning algorithms rely on background subtraction, which assumes a uniform, static background; that is not robust enough for broad usability, let alone universal usability. The scanning must also be discriminating enough to produce almost no false positives. Lastly, the scanning must be fast.

- Tracking Hands: This algorithm should provide stable tracking for the hands found by the scanning algorithm, and reliably register their orientation and the number of visible digits. Noise between successive frames should be kept to a minimum.

- Processing a Gesture: The received hand positions and the orientations of the associated fingers will be processed to produce meaningful interactions with a computer. One application that has been conceived is controlling a computer mouse with these gestures.
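
To make the back projection idea in the scanning goal more concrete, here is a minimal pure-Python sketch. It is not the project's implementation: the function names, the 16-bin histogram, the 0.5 threshold, and the 0-179 hue range are all assumptions made for illustration, and the shape analysis step is left out. The idea is to build a hue histogram from pixels known to belong to a hand, then score every pixel of a new frame by how common its hue was in that sample.

```python
from collections import Counter


def build_hue_histogram(sample_hues, bins=16, hue_range=180):
    """Build a peak-normalised hue histogram from known hand pixels.

    Assumption: hue values lie in [0, hue_range), here 0-179.
    """
    if not sample_hues:
        raise ValueError("need at least one sample hue")
    bin_width = hue_range / bins
    counts = Counter(min(int(h / bin_width), bins - 1) for h in sample_hues)
    peak = max(counts.values())
    # Scale so the most common bin scores 1.0.
    return [counts.get(b, 0) / peak for b in range(bins)]


def back_project(hue_image, histogram, hue_range=180):
    """Replace each pixel's hue with its histogram score (hand likelihood)."""
    bins = len(histogram)
    bin_width = hue_range / bins
    return [
        [histogram[min(int(h / bin_width), bins - 1)] for h in row]
        for row in hue_image
    ]


def threshold_mask(likelihood, threshold=0.5):
    """Turn the likelihood map into a binary mask of candidate hand pixels."""
    return [[1 if p >= threshold else 0 for p in row] for row in likelihood]


# Hypothetical data: hues sampled from a hand, and a tiny 3x4 hue image
# where hue 100 stands in for the background.
hand_samples = [10, 12, 11, 14, 9, 13]
frame_hues = [
    [100, 100, 11, 12],
    [100, 10, 13, 100],
    [100, 100, 100, 100],
]

histogram = build_hue_histogram(hand_samples)
mask = threshold_mask(back_project(frame_hues, histogram))
for row in mask:
    print(row)
```

Because the score depends only on a pixel's color and not on what the background looked like in earlier frames, this kind of approach does not need a static or uniform background. The resulting mask would still need shape analysis to reject skin-colored regions that are not hands.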

Algorithm Methodology